Budget forecasts: take them with a pinch of salt

Predicting the future is hard—ask any forecaster.

On Wednesday the Chancellor presented the government’s latest Budget. The Office for Budget Responsibility (OBR)—the independent government economic watchdog—published its latest economic forecasts, including a forecast of economic growth.

The OBR was set up in 2010 and made its first official economic forecast that year, taking over responsibility for forecasting from the Treasury.

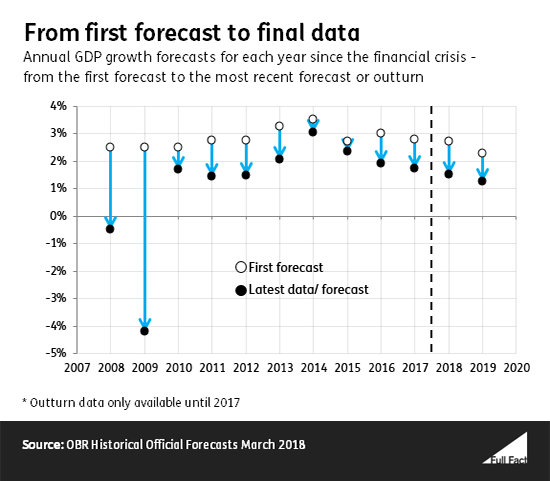

The first forecast of economic growth (GDP) for any given year is made around five years in advance. Since 2008, these early forecasts have been more optimistic about economic growth than what ended up happening.

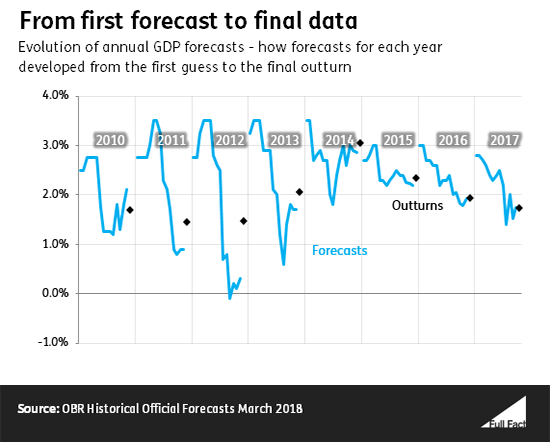

Forecasts get revised over time—sometimes upwards and sometimes downwards. The graph below shows how forecasts for each year developed. The black dots show how the actual economic outcomes turned out.

In some cases, the revisions brought the forecast closer to what actually happened with economic growth, and in others they moved it further away.

Notoriously, economic forecasters failed to predict the financial crisis in 2008 and were poor at forecasting the recovery.

Forecasts are based on economic models

Economists use data about the past to make claims about the future. Forecasts are not the same as data or statistics about past events.

A figure from a forecast is not a fact. Often there is too little data, too incomplete an understanding of key relationships, and too little ability to foresee big shocks for forecasts to be thought of as anything other than indications.

The OBR and the Bank of England present forecasts as ‘fan charts’, showing a range of possible outcomes around a best guess.
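To make the idea of a range around a best guess concrete, here is a minimal sketch of one simple way to build such a band: take a central forecast and add the spread of past forecast errors to it. This is an illustration only, not necessarily how either institution constructs its fan charts. The function name `fan_band` and every number below are invented for the example.

```python
from statistics import quantiles


def fan_band(central, past_errors):
    """Return (low, high): a rough 80% band around a central forecast.

    past_errors are (outcome - forecast) values from earlier forecasts,
    in percentage points. This helper is hypothetical.
    """
    # quantiles(..., n=10) returns nine cut points: the 10th to 90th percentiles.
    deciles = quantiles(past_errors, n=10)
    return central + deciles[0], central + deciles[-1]


# Invented illustration values, not OBR or Bank of England figures.
illustrative_errors = [-2.0, -1.5, -1.0, -0.5, 0.0, 0.0, 0.5, 0.5, 1.0, 1.5]
low, high = fan_band(2.0, illustrative_errors)
print(f"central forecast 2.0%, rough 80% band: {low:.2f}% to {high:.2f}%")
```

The wider the spread of past errors, the wider the band, which is why a single central number says little without the range around it.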

Take the example of weather forecasts. We (usually) have a decent idea if it will rain later today.

This is thanks to mathematical models of how the weather works. Forecasters gather lots of data, look at past patterns, make some assumptions, and solve a set of mathematical equations to tell us something useful about the world.

By gathering data, making key assumptions, and solving a system of equations, economic forecasters try to tell us something useful too.

Like weather forecasts, economic models are sometimes very wrong. They have a better record of predicting the impact of small changes in the near term than of large “unpredictable” events that break with the immediate past.

Models look at the past to predict the future. It follows that they are most likely to be accurate when it is reasonable to think that the future might unfold pretty much like the past. For the same reasons, it is no surprise that models are very poor at predicting turning points, like big shocks and their consequences (such as the 2008 financial crisis).
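A toy example shows why turning points are so hard. The sketch below forecasts the next value of a series by extending its average past change, which is far simpler than any real economic model, and compares the result with a calm outcome and a sudden downturn. The function `extrapolate` and all the figures are invented for illustration.

```python
def extrapolate(history):
    """Forecast the next value as the last value plus the average past change."""
    changes = [b - a for a, b in zip(history, history[1:])]
    return history[-1] + sum(changes) / len(changes)


# Invented index values, not real GDP data.
steady = [100.0, 102.0, 104.0, 106.0]
forecast = extrapolate(steady)

outcome_calm = 108.0   # the future unfolds much like the past
outcome_shock = 101.0  # a sudden downturn the history gave no hint of

error_calm = abs(forecast - outcome_calm)
error_shock = abs(forecast - outcome_shock)
print(f"forecast {forecast}, error if calm {error_calm}, error if shock {error_shock}")
```

Nothing in the steady history signals the downturn, so any method that only extends past patterns will miss it by a wide margin.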

And like weather forecasts, different models and different institutions make different predictions about what’s going to happen.

The forecasts got it particularly wrong for 2011 and 2012 because the first estimates were made before the financial crisis hit the UK. They got gradually closer to the mark as more forecasts were made.

What is the point of producing a forecast?

Understanding the assumptions that have gone into economic forecasts, and their potential limitations, is what makes them a good starting point for debate and planning.

The OBR says that forecasts play an important role in helping it set policy and in helping assess the government’s progress toward targets.

In an evaluation of the Bank of England’s forecasting, its own Independent Evaluation Office talked about three purposes of forecasting. It said the Bank’s forecasts are intended to:

- Help inform an understanding of what is happening in the economy, what is likely to happen to the economy, and what the risks are around the forecasts;

- Help the Bank consider the pros and cons of different monetary policies;

- Be a way to communicate the Bank’s thoughts on the outlook and risks to the economy.

So forecasts of GDP and other economic data are useful for policy makers and provide a context for decisions and discussion—but we shouldn’t focus on a single number in the forecast and assume that it is going to be a good prediction of what will happen.

Update 23 November 2017

We updated this piece to include the November 2017 OBR forecasts.

Update 13 March 2018

We updated this piece to include the March 2018 OBR forecasts.