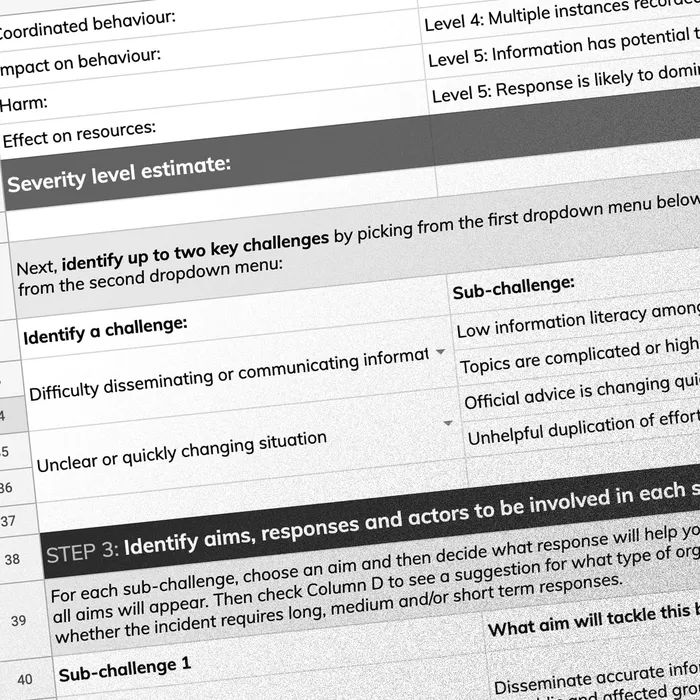

Framework for Information Incidents

A new shared model to help different organisations collaborate effectively during an information crisis.

The Framework is intended to be used on a voluntary and open basis. It supports collaboration between counter-misinformation actors from the technology sector, government, civil society, official information providers, the media, and others.