How does Automated Fact Checking work?

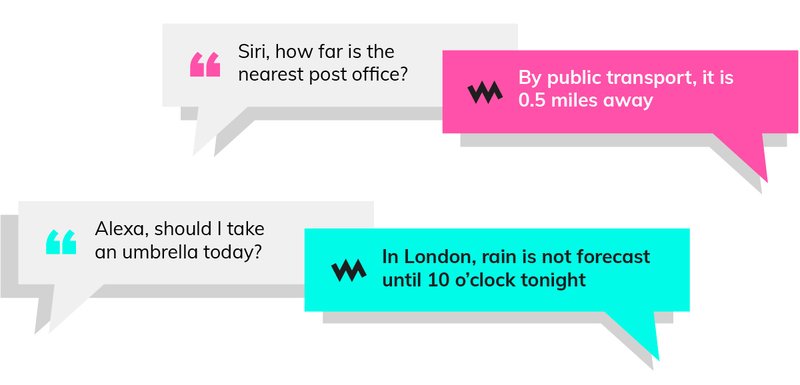

In recent years, artificial intelligence (AI) has revolutionised our lives. Many of us use smart assistants like Siri or Alexa and ask them for weather forecasts, movie recommendations or to book a restaurant for us. Perhaps less obviously, AI algorithms are also used to help with medical diagnoses, loan approvals, search engine results and almost all our interactions with social media.

So why not apply AI to fact checking? Can we use these new technologies to automatically verify what we read in the papers or see on the news?

What do fact checkers actually do?

First, they decide each day what to check. This involves actively monitoring a range of newspapers, political TV programmes, radio broadcasts and social media. They identify potentially inaccurate or misleading statements from politicians, journalists and other influential people, especially where the issues at stake could cause real harm.

Second, they check to see if the claim has already been checked. If the claim has changed, the published article is out of date, or the nature of the repetition is important, then a new article may be required. But if the claim is a repeat or paraphrase of one that’s already been recently checked, there may be no need to spend time checking it again.

Third, they research the claim and the story behind it. This might involve interviewing experts, tracking down the original source of a statistic or quote and figuring out what’s really going on. They may also reach out to the person making the claim and ask for a comment or explanation.

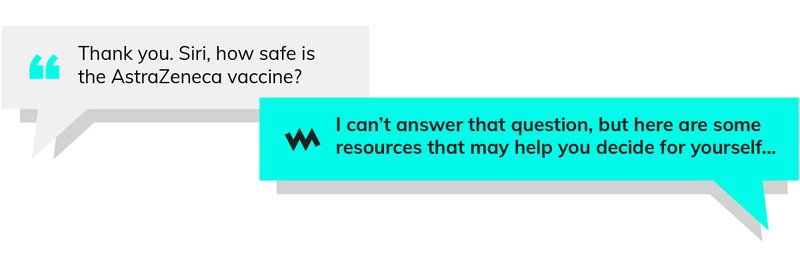

Fourth, they write the fact check article, including an explanation of how the claim is inaccurate or how the context is incomplete. They also provide clear links to evidence, helping members of the public to fully understand the claim and form their own judgements.

Finally, they may contact the person who made the misleading claim and ask them to correct the record or withdraw the claim.

All of this work takes time, so it’s natural to ask: can we automate some or all of these tasks?

How can AI and technology help?

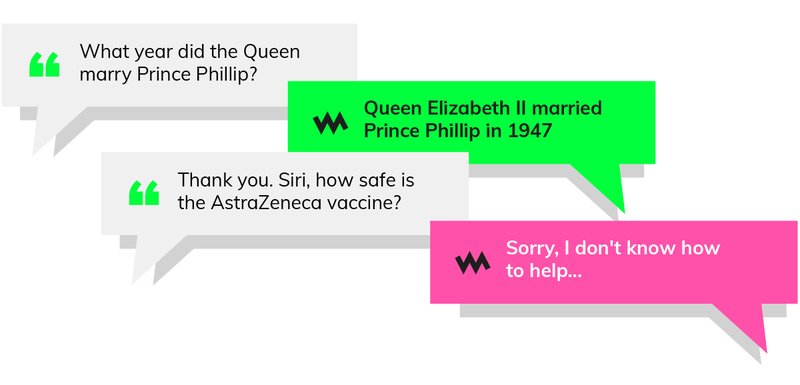

Let’s start big and consider what a fully automatic fact checking tool might look like. Ideally, it would be able to understand any question asked of it, turn to the most reliable sources of information and give a comprehensive and comprehensible answer. There have been a few academic projects that explore this, but they tend to be quite limited in scope. Some are limited to information found on Wikipedia, while others are limited to specific technical domains, such as European energy generation.

Such a tool faces at least two major challenges. First, spoken (and written) language is full of ambiguity and implicit context. When talking with other people, we assume that they already share a large part of our understanding, even if they possess some information that we do not. Second, the answers to many of the most interesting questions cannot simply be looked up in a database or a stock list of facts, but instead require some complexity and nuance to avoid a misleadingly simplistic answer.

So fully automated verification is a (very!) long way from being useful, and fact checkers’ jobs are safe for the foreseeable future.

When asked about what tech would actually help them, fact checkers often ask for help with:

- deciding what to check each day (which includes media monitoring, choosing claims that are most 'checkworthy', finding viral trends on social media and so on);

- evidence retrieval, to speed up the research phase of fact checking by automatically finding and retrieving relevant data sources, recent reports and related claims; and

- triaging tip lines, such as prioritising incoming requests through WhatsApp channels, or even automatically replying to certain types of request.

What technology do we use at Full Fact?

The kinds of technology discussed above are very specialist, so off-the-shelf software is rarely available. At Full Fact, we therefore continue to develop our own tools to support fact checkers. We use these within Full Fact to help produce and monitor fact checks, and we make the tools available to other organisations such as Africa Check.

Our Candidates tool helps users find claims that may be worth checking. It works by analysing tens of thousands of sentences every day from newspapers, parliamentary reports and social media, and letting fact checkers search over them. For example, we can search for “all claims made by Labour politicians that contain a quantity”, or “all claims made by Conservative politicians that mention the NHS”. In this way, experienced fact checkers can help uncover recent claims worth investigating, whether or not they made the headlines.
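To make the idea of searching over claims concrete, here is a minimal sketch of that kind of filter. It is not Full Fact's implementation: the `Sentence` record, the `search` function, the regular expression used to spot a quantity and the sample sentences are all assumptions made up for illustration.

```python
import re
from dataclasses import dataclass


@dataclass
class Sentence:
    """Hypothetical record for one monitored sentence (not the real data model)."""
    text: str
    speaker: str
    party: str


# Rough, assumed pattern for "contains a quantity": a number, optionally
# followed by a percent sign or a scale word.
QUANTITY = re.compile(
    r"\d[\d,.]*\s*(?:%|per cent|percent|thousand|million|billion)?",
    re.IGNORECASE,
)


def contains_quantity(text):
    """Return True if the text appears to mention a number."""
    return QUANTITY.search(text) is not None


def search(sentences, party=None, keyword=None, needs_quantity=False):
    """Keep only the sentences that satisfy every filter that was supplied."""
    results = []
    for s in sentences:
        if party is not None and s.party != party:
            continue
        if keyword is not None and keyword.lower() not in s.text.lower():
            continue
        if needs_quantity and not contains_quantity(s.text):
            continue
        results.append(s)
    return results


# Invented sample sentences, for illustration only.
SAMPLE = [
    Sentence("We have hired 5,000 more nurses this year.", "Speaker A", "Conservative"),
    Sentence("The NHS is the pride of this country.", "Speaker B", "Conservative"),
    Sentence("Crime rose by 12% under this government.", "Speaker C", "Labour"),
    Sentence("We will always stand up for working people.", "Speaker D", "Labour"),
]

labour_with_quantity = search(SAMPLE, party="Labour", needs_quantity=True)
conservative_nhs = search(SAMPLE, party="Conservative", keyword="NHS")
```

A production system would need far more robust claim detection than a regular expression and a substring match, but the shape of the query (combine a few simple filters, then let a fact checker read the results) is the same.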

Our Digest tool also analyses tens of thousands of sentences and compares them to each of our recently published fact checks. The most likely matches for each fact check are summarised for review. Where appropriate, we can then contact journalists or politicians who have repeated a claim that we have already checked, without having to carry out a time-consuming fact check from scratch.
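The matching step can be illustrated with a very simple similarity measure. The sketch below scores each sentence against a fact-checked claim using Jaccard overlap of their words and keeps the best candidates for human review. The article does not say how Digest actually compares sentences, so the measure, the function names, the threshold and the example text are all assumptions.

```python
import re


def tokens(text):
    """Lower-case the text and return its set of alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a, b):
    """Jaccard similarity: shared tokens divided by all distinct tokens."""
    ta, tb = tokens(a), tokens(b)
    if not ta or not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


def best_matches(fact_checked_claim, sentences, threshold=0.5, top_n=3):
    """Return up to top_n (score, sentence) pairs at or above the threshold,
    highest score first, for a human to review."""
    scored = [(jaccard(fact_checked_claim, s), s) for s in sentences]
    scored = [pair for pair in scored if pair[0] >= threshold]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_n]


# Invented example text, for illustration only.
claim = "Crime has risen by 12% since 2019"
monitored = [
    "Crime has risen by 12% since 2019, the minister said",
    "The weather will be sunny tomorrow",
]
matches = best_matches(claim, monitored)
```

Word overlap misses paraphrases that use different vocabulary, which is one reason the output is a list of likely matches for review rather than an automatic decision.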

Our Live tool generates real-time transcripts of broadcasts including Prime Minister’s Questions, the Today programme and other political news programmes as required. It incorporates the same claim analysis tools found in Candidates (above), allowing fact checkers to focus on checking facts without also having to manually transcribe speeches as they are made.

Finally, let’s return to automated verification. We are in the process of creating a prototype of a new automatic “robochecking” tool. As discussed earlier, the challenges include ambiguous language and limited access to trustworthy data, and these challenges remain. But even if only a few sentences are straightforward enough to be processed by the tool, and even if only a few of the answers can be found in trustworthy data sources, these tools can be useful. For example, detailed monitoring of everything politicians say could guide fact checkers to dig deeper into contentious claims, even if the tool cannot say with absolute confidence that a claim is true or false.

All of these tools require expert — human! — fact checkers to make decisions throughout the process and to communicate the final results to our audience.

For a deeper review of these issues, please see our recent collaborative paper: Automated Fact-Checking for Assisting Human Fact-Checkers (arXiv:2103.07769).