Towards a common definition of claim matching

After Full Fact publishes each fact check, we keep monitoring the media to see if anyone repeats misleading claims. Where appropriate, we can then contact journalists or politicians who have repeated a claim we’ve already checked, without having to carry out a time-consuming fact check from scratch. Given the large volumes of media that may contain such matches, we are developing tools to help. Others, including academics and big tech companies, are working on similar problems.

In this blog post, we present a formal definition of claim matching, relate it to others’ work and explore the scope of the problem. It is not intended as a comprehensive literature review, and we do not discuss the details of algorithms that may be used to find claim matches. Instead, we provide a framework that we hope will be useful for comparing, synthesising and directing the variety of work being done in this field.

Claim matching as shared truth conditions

We define a claim as a statement about the world that is either true or false. We are primarily interested in claims which we can determine are in fact true or false, so we don’t try to verify claims that are predictions, hypotheticals or personal beliefs.

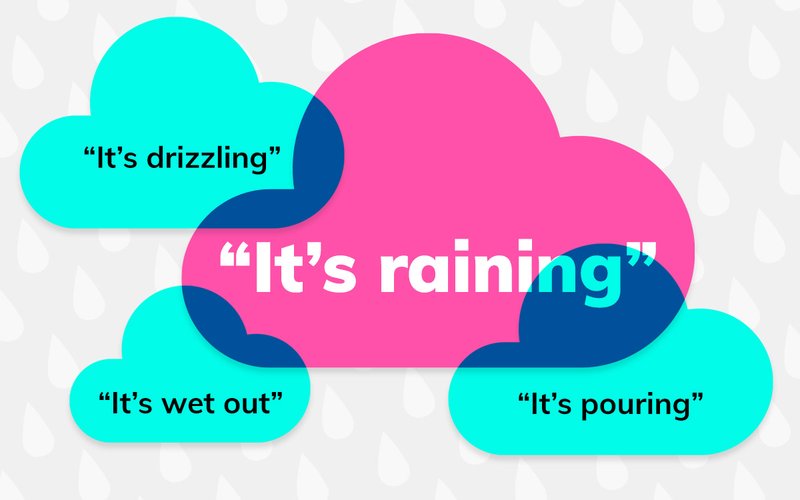

A truth condition is the condition under which a statement is true or, put another way, what it would take for the claim to be true (Wikipedia). For example, the claim that “it is raining in London” is true under the condition that it is in fact raining in London.

We define two claims as matching claims if they have the same truth conditions. A particular state of the world might make them both true or both false, but there is no possible state where one is true and the other false. Thus learning that one claim is true tells you that the other claim must also be true (and the same holds for ‘false’).

Given a set of fact-checked claims V, a set of unchecked claims U and a function t(c) that returns the truth condition of a claim c, we can define our claim matching task as identifying u and v such that

t(v)=t(u) for some v∈V, u∈U.

While finding a general algorithm to identify truth conditions is a hard problem, the concept provides a useful way to consider what exactly we mean by claim matching.
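To make the definition concrete, here is a minimal Python sketch. It assumes a toy model in which the world has only four possible states and each claim is a hypothetical predicate over those states, so that t(c) is simply the set of states in which c holds. The state space, the example claims and the helper names (`truth_condition`, `find_matches`) are illustrative assumptions, not a description of any real tool.

```python
from itertools import product

# Toy state space (an assumption for illustration): is it raining in London,
# and is it raining in Paris? Each world is a (london, paris) pair of booleans.
WORLDS = list(product([True, False], repeat=2))

def truth_condition(claim):
    """t(c): the set of possible worlds in which the claim is true."""
    return frozenset(w for w in WORLDS if claim(w))

def find_matches(checked, unchecked):
    """Return (v, u) name pairs with t(v) == t(u), for v in V and u in U."""
    return [
        (v_name, u_name)
        for v_name, v in checked.items()
        for u_name, u in unchecked.items()
        if truth_condition(v) == truth_condition(u)
    ]

# V: fact-checked claims; U: unchecked claims (all hypothetical).
V = {"It is raining in London": lambda w: w[0]}
U = {
    "London is seeing rain": lambda w: w[0],
    "It is raining in Paris": lambda w: w[1],
    "It is dry in London": lambda w: not w[0],
}

matches = find_matches(V, U)
print(matches)
```

Only the rephrased London claim is returned as a match. The Paris claim and the negated claim share vocabulary with the checked claim but are true in different sets of worlds. For real text, t cannot be computed by enumeration like this, which is why the definition serves as a conceptual reference point rather than an algorithm.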

Example claim matching tasks

Using the above definitions, we can compare and contrast different approaches to applied claim matching. To define a claim matching task, we must define V (the set of fact-checked claims) and U (the set of unchecked claims). The nature of these two sets determines a lot of the task in practice.

At Full Fact, we match claims found in newspapers and elsewhere to our database of fact checks. If a checked claim is repeated by a journalist or politician, we can request a correction from them, slowing the spread of misinformation.

- V = Full Fact fact checks

- U = sentences from digital newspapers, Hansard reports, TV/radio transcripts, some social media

On any given day, Full Fact may compare 40-80 recent fact checks with up to 100,000 sentences from the media. Only a few matches are likely to be found, so this naturally forms a binary classification task with very unbalanced classes.
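A rough calculation shows how unbalanced the classes are. The sketch below uses the figures quoted above for fact checks and sentences; the number of true matches (10) is a made-up value, and `pair_stats` is a hypothetical helper, both chosen only to illustrate the point.

```python
def pair_stats(n_checks, n_sentences, n_matches):
    """Return (candidate pairs, fraction of pairs that are matches)."""
    pairs = n_checks * n_sentences
    return pairs, n_matches / pairs

# Figures from the text: 40-80 fact checks, up to 100,000 sentences.
# n_matches=10 is an assumed value for illustration only.
for n_checks in (40, 80):
    pairs, positive_rate = pair_stats(n_checks, 100_000, n_matches=10)
    # A classifier that always answers "no match" is wrong only on the matches.
    baseline_accuracy = 1 - positive_rate
    print(f"{n_checks} checks: {pairs:,} pairs, "
          f"positive rate {positive_rate:.2e}, "
          f"'never match' accuracy {baseline_accuracy:.6f}")
```

With millions of candidate pairs and only a handful of matches, a model that never predicts a match would still score almost perfect accuracy. Precision and recall on the match class are therefore more informative measures for this kind of task.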

One project of Meedan (Kazemi et al 2021) matches WhatsApp tips with Indian fact checks across 5 languages. If an incoming tip matches a published fact check, then the fact check can be automatically sent out as a reply, potentially slowing the spread of misinformation.

- V = fact checks from several organisations

- U = tips from WhatsApp users

This automation minimises response times while also minimising costs.

Part of the CheckThat! Lab (Barron-Cedeno et al 2020) defines a “Verified Claim Retrieval” task to match tweets to fact checks and then rank them to find checks that are helpful to verify the tweets.

- V = fact checks from Snopes (10,375 checks)

- U = tweets mentioned in one of the fact checks (1,197 tweets)

Because of the way the data was selected, every tweet should be assigned to one fact check (or sometimes a few). See Shaar et al (2020) for one response to this challenge, which also extends the analysis to include reports by Politifact on political speeches and debates:

- V = fact checks from Politifact (768 checks)

- U = reports of political debates and speeches (78 reports)

Recent work from Sheffield University (Jiang et al 2021) searches for tweets and matches them to fact checks from multiple IFCN fact checkers, focussing on UK/US Covid-19 related fact checks. The paper also describes an “evidence based misinformation classification task”, which uses IFCN fact checks as evidence of misinformation.

- V = fact checks from IFCN members in English, about Covid-19 (90 checks)

- U = tweets retrieved using the fact checks as search terms (1,800 tweets)

Lastly, search engine results from Google and Bing can highlight relevant fact check articles that appear to match a claim that is being searched for (e.g. Wang et al., 2018; Bing 2017). Google’s Fact Check Explorer is a convenient tool to access fact checks from many IFCN organisations, but makes no attempt to find new matches itself.

In summary, the defining features of these tasks include:

- the set of checked claims to match against (which may be limited by topic, organisation, date, language etc.)

- the nature of the source texts containing unchecked claims (which may be tweets, newspaper articles, debate transcripts and may also be pre-filtered by language, topic, etc.)

- the assumed frequency of matches (e.g. is every unchecked claim assumed to be related to exactly one verified claim? Or are most not related to any verified claims?)

- the degree of automation. Will there always be a human-in-the-loop, or will the predictions be used directly with no further verification?

Some challenges to claim matching in practice

Ambiguity: All claims are ambiguous to some degree. Many words have multiple meanings (homonyms) and the same meaning can be expressed with different words (synonyms). So in practice, we may accept that two claims are matching if their truth conditions are approximately equal.

Time dependency: The truth condition of many claims varies over time, meaning that a claim may be true one day but false the next. For example, the claim “inflation is 2.5%” has been true in the UK for 5 months out of the last 10 years.

Asymmetry: For some tasks, we may relax the definition given earlier into a directional form, such that learning that one claim is true tells you the other is true, but not vice versa. If the truth conditions of the first claim are a subset of the truth conditions of the second claim, then it is possible for the first claim to be false and the second true, but it is impossible for the second claim to be false and the first true. If t(v)⊃t(u), then the unverified claim u can be true only if the fact-checked claim v is true. Thus, if v has been found to be false, we can re-use the existing fact check article to reject claim u. Conversely, if t(u)⊃t(v) and v has been found to be true, we can re-use it to confirm u. An example of asymmetry is when one claim is more precise than another, such as claiming inflation is “about 3%” vs “exactly 2.9%”.
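The subset relationship in the inflation example can be sketched by treating each possible inflation rate as a possible world. This is a toy model: the reading of “about 3%” as “between 2.5% and 3.5%” and the helper names `truth_condition` and `entails` are assumptions made for illustration.

```python
# Possible worlds: the inflation rate in tenths of a percent (0.0% to 10.0%).
# Integers avoid floating-point comparison problems.
WORLDS = range(0, 101)

def truth_condition(claim):
    """t(c): the set of worlds (inflation values) in which the claim is true."""
    return frozenset(w for w in WORLDS if claim(w))

# Assumption: "about 3%" is read as "between 2.5% and 3.5% inclusive".
about_3 = truth_condition(lambda w: 25 <= w <= 35)
exactly_2_9 = truth_condition(lambda w: w == 29)

def entails(t_a, t_b):
    """a entails b when every world that makes a true also makes b true."""
    return t_a <= t_b  # subset test on frozensets

print(entails(exactly_2_9, about_3))  # True
print(entails(about_3, exactly_2_9))  # False
print(about_3 == exactly_2_9)         # False
```

Under these assumptions, the precise claim entails the vague one but not the other way round, and the two claims are not matching in the strict sense because their truth conditions differ. A fact check finding “about 3%” false would rule out “exactly 2.9%”, while a finding that it is true would leave the precise claim undecided.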

Context: Often, the truth condition of a claim can only be identified when the context is considered. This may mean considering the previous sentence or two, or sometimes the whole article. Shaar et al (2021) explore how co-reference resolution, local context and global context can all help with identifying matching claims.

Related problems

Here, we briefly discuss some related tasks and methods, in order to put claim matching into context.

Semantic similarity attempts to measure how similar in meaning two pieces of text are, but not all differences in semantics are equally important in claim matching. For example, “Boris Johnson stated that inflation fell to 2%” and “Emmanuel Macron stated that inflation fell to 2%” are semantically very similar, but the truth conditions of the two claims are independent so we would of course not consider them as matching claims.

Information retrieval: using conventional indexing methods, such as term frequencies or n-grams, will tend to match claims that contain similar terms even if the underlying meaning, and truth conditions, are quite different.
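A small sketch shows how term overlap can mislead. It applies Jaccard similarity over whitespace-separated tokens, a deliberately crude stand-in for term-based indexing rather than any particular system's method, to the two example sentences about inflation given above. The function names are hypothetical.

```python
def tokens(text):
    """Lower-case and split on whitespace; a deliberately crude tokenizer."""
    return set(text.lower().split())

def jaccard(a, b):
    """Share of distinct terms that two texts have in common (0 to 1)."""
    ta, tb = tokens(a), tokens(b)
    return len(ta & tb) / len(ta | tb)

claim_1 = "Boris Johnson stated that inflation fell to 2%"
claim_2 = "Emmanuel Macron stated that inflation fell to 2%"

score = jaccard(claim_1, claim_2)
print(score)  # 0.6
```

Six of the ten distinct terms are shared, so a term-based measure rates the pair as highly similar, even though the two claims are about different speakers and have independent truth conditions.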

Paraphrase detection: Sometimes, two matching claims may differ by only a few words that share meanings (i.e. synonyms), or they may be formed from the same phrases expressed in a different order. But in other cases, matching claims may be expressed in arbitrarily different ways, and even in different languages, such as with Meedan’s work mentioned above, limiting the value of paraphrase detection as a complete solution.

Textual entailment: If a reasonable person reading claim a would also believe claim b, we say a entails b, also written a ⇒ b. The bidirectional form, a ⇔ b, means that learning a is true leads one to believe that b is true and that learning b is true also leads one to believe that a is true. This will tend to happen if claims a and b have the same truth conditions, so bidirectional textual entailment provides an alternative way to define claim matching.

Conclusions

In order to bring together a range of work on claim matching, we have presented a formal definition of when two claims can be said to match, independently of the specific data sets or algorithms used, and have outlined various related tasks and approaches. In a future post, we will discuss the tools we have developed to perform claim matching at Full Fact and the impact they are having.

References

Barron-Cedeno, A., Elsayed, T., Nakov, P., Martino, G. D. S., Hasanain, M., Suwaileh, R., Haouari, F., Babulkov, N., Hamdan, B., Nikolov, A., Shaar, S., & Ali, Z. S. (2020). Overview of CheckThat! 2020: Automatic Identification and Verification of Claims in Social Media. ArXiv:2007.07997 [Cs]. arxiv.org/abs/2007.07997

Bing (2017). Bing adds Fact Check label in SERP to support the ClaimReview markup.

Jiang, Y., Song, X., Scarton, C., Aker, A., & Bontcheva, K. (2021). Categorising Fine-to-Coarse Grained Misinformation: An Empirical Study of COVID-19 Infodemic. ArXiv:2106.11702 [Cs]. arxiv.org/abs/2106.11702

Kazemi, A., Garimella, K., Shahi, G. K., Gaffney, D., & Hale, S. A. (2021). Tiplines to Combat Misinformation on Encrypted Platforms: A Case Study of the 2019 Indian Election on WhatsApp. ArXiv:2106.04726 [Cs]. arxiv.org/abs/2106.04726

Shaar, S., Martino, G. D. S., Babulkov, N., & Nakov, P. (2020). That is a Known Lie: Detecting Previously Fact-Checked Claims. ACL. arxiv.org/abs/2005.06058

Shaar, S., Alam, F., Martino, G. D. S., & Nakov, P. (2021). The Role of Context in Detecting Previously Fact-Checked Claims. ArXiv:2104.07423 [Cs]. arxiv.org/abs/2104.07423

Wang, X., Yu, C., Baumgartner, S., & Korn, F. (2018). Relevant Document Discovery for Fact-Checking Articles. The Web Conference 2018, pp. 525–533. doi.org/10.1145/3184558.3188723