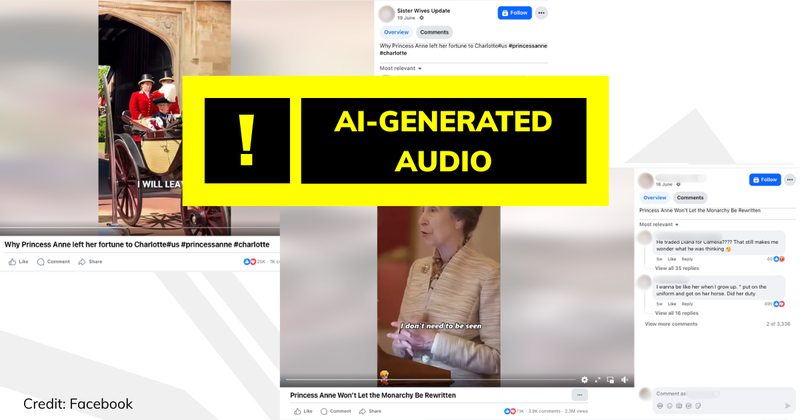

Two videos circulating on social media appear to share recordings of Princess Anne making a number of claims about Queen Camilla and saying she will be giving her inheritance to Princess Charlotte. But these are not genuine recordings and have been made with artificial intelligence.

In one clip, a voice that is meant to be Princess Anne says: “I will never stand by in silence while someone tries to take control of the Royal Family”, in an apparent reference to Queen Camilla. In the other clip, Princess Anne supposedly says she is leaving her inheritance and royal title to Princess Charlotte, allegedly to protect her from her “step-grandmother”.

Both of the videos, which each have over a million views, also include background music and show footage of Princess Anne and Queen Camilla, with one also featuring clips of Princess Charlotte. Comments on the videos suggest people believe the audio to be real. A comment on the first clip says: “Love Princess Anne. She tells it as it is.” One on the second clip says: “Anne is so smart!!! Good for her and her thinking”.

But these are not genuine recordings of Princess Anne. They feature deepfake audio clips made using artificial intelligence. All of the video clips we took a closer look at, however, were genuine footage, and we could see no signs the visuals had been altered with AI.

Deepfake audio

Dr Dominic Lees, convenor of the University of Reading’s Synthetic Media Research Network, told Full Fact that these videos feature “compilations of clips from the Princess Royal’s public engagements over a voice clone”.

Voice cloning refers to the mimicking of a person’s voice using AI that identifies speech patterns in a genuine sample of them speaking. Text-to-voice software can then deliver any text in this synthetic voice.

Dr Lees adds: “They have the tell-tale hallmarks of ‘deepfake audio’: a quality of voice recording that sounds like it has been made in a completely soundproofed studio; lack of breaths and other natural attributes of human voice; unnatural intonation; and inappropriate music added in order to obscure these deficiencies.”

He previously told us that background music is “frequently a technique by fakers used to hide the sound imperfections in their work” when commenting on another example of deepfake audio we wrote about.

Moreover, we could find no credible reports of Princess Anne ever having made these comments relating to Queen Camilla or Princess Charlotte.

Deepfake audio clips are becoming a common form of misinformation we see online. We’ve previously written about examples where the voices of Prime Minister Sir Keir Starmer and President Donald Trump were cloned. Such clips can be particularly challenging to debunk because they don’t include the more obvious visual mistakes that can be used to spot AI-generated images and videos.

While there are several online tools that claim to be able to tell whether an audio clip was generated using AI, we have found these don’t work consistently, so we currently don’t cite them in our articles.

You can find out more about analysing and identifying both AI-generated video and audio in our guide.