Revealed: how academics are being deepfaked on TikTok and Instagram to promote supplements

On 11 August this year Professor David Taylor-Robinson received an email from a colleague at the University of Liverpool, where he works. “Are you aware you are on TikTok?” the email said.

Professor Taylor-Robinson was not aware. He’s a children’s public health doctor with a particular interest in inequality, and he is not on TikTok.

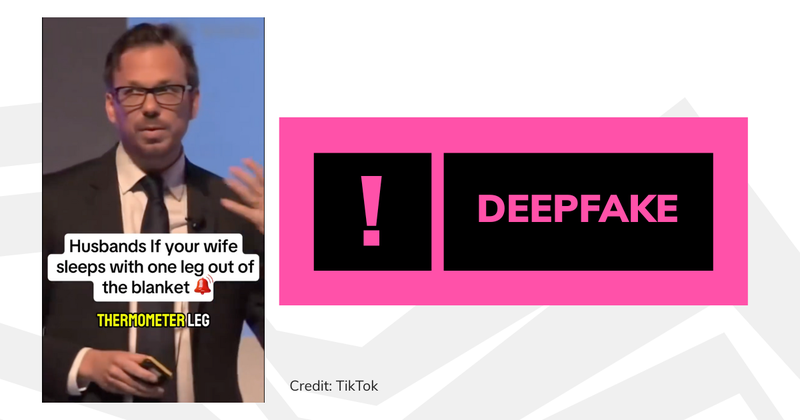

But there he was. Or a version of him was. What he found was footage of his real appearance at a Public Health England (PHE) conference, subtly altered to make him talk not about the north-south health divide but about “thermometer leg”.

As the fake Professor Taylor-Robinson explained, this was supposedly a symptom of menopause in which women who become too hot at night extend one leg beyond the blanket.

The fake professor had a lot to say about the menopause, in fact. He’d recorded several videos including some based on a select committee appearance the real professor made in May this year. This was also surprising, because the real Professor Taylor-Robinson isn’t a menopause expert at all.

It turned out he wasn’t alone. A Full Fact investigation has found that a series of social media accounts have been using AI-generated deepfakes of real doctors and academics—including Professor Taylor-Robinson—to promote health products with bogus endorsements.

The result is a growing wave of AI-driven misinformation that risks damaging the reputations of those it impersonates, and could lead to people making decisions about their health based on fake claims.

TikTok at first did not remove them

Professor Taylor-Robinson notified his manager and the comms team at the university, which reported the content to TikTok, only to be told that no violations were found.

He and his children then reported the posts to TikTok themselves. A couple of days later, TikTok replied that the videos did break its community guidelines. It said their visibility would be restricted and they would no longer be eligible for recommendation by the algorithm, but they were not taken down. Soon afterwards, the Health Foundation, which had heard about Professor Taylor-Robinson’s predicament, contacted Full Fact on his behalf.

“One of my friends said his wife had seen it and was almost taken in by it, until their daughter said it’s obviously been faked,” he told Full Fact. “So people who know me could have been taken in by it. That is concerning.”

To be clear, we’ve not checked the actual health claims in these videos as part of this investigation. The evidence base for supplements is a vast and often confusing landscape. But fabricated testimonials from AI-generated ‘experts’ are clearly not a reliable source of health advice. Nor would you expect people to rely on this advice when deciding how to spend their money.

He wasn’t the only one

Looking more closely at the account behind the videos—@better_healthy_life—we were able to identify other examples of deepfaked academics and public health leaders. Eight videos showed the former chief executive of PHE, Duncan Selbie, also supposedly talking about menopause.

When Full Fact contacted Professor Selbie, he was amazed. He doesn’t use social media, and hadn’t seen the videos before. “I thought what you sent was an amazing imitation,” he said. “Although the content was total nonsense. It’s a complete fake from beginning to end.”

The Selbie videos seem to be based on a talk he gave at the same PHE conference, suggesting that whoever made them had taken footage of both him and Professor Taylor-Robinson from the same event. But although he admired their technical accomplishment, he wasn’t at all pleased that they existed. “It wasn’t funny in the sense that people pay attention to these things,” he told us.

Another video appears to be a crude deepfake of the Russian economist Natalia Zubarevich, in which she talks with both American and British accents, again entirely about menopause. And there are others that may be deepfakes of real people who we have not yet been able to identify.

There were even what appear to be deepfake videos of Dr Aseem Malhotra, whose claims about Covid vaccines we have fact checked a number of times before.

TikTok finally takes the video down

None of these videos reached as many people as the fake Professor Taylor-Robinson’s thermometer leg video, however. It had accrued more than 365,000 views, 7,691 likes, 459 comments and 2,878 bookmarks by the time @better_healthy_life was permanently banned, following our email to TikTok on 24 September.

The comments below the thermometer leg post show how convincing these fakes can be. “OMG that’s so me,” said one. “It sounds good to try these but expensive,” said another. “Exactly what I’m going through,” said a third.

TikTok admitted to us that it had made a moderation error in not deleting the posts sooner. It told us it eventually removed them for breaching its impersonation and misinformation policies. It also told us it requires users to label AI-generated content.

A series of deepfakes pushing supplements

Anyone who watched to the end of the thermometer leg video will have heard the fake professor say this:

One solution I recommend: probiotic for women in menopause from Wellness Nest. It’s a natural probiotic from ayurvedic medicine. It features ten science-backed plant extracts including turmeric, black cohosh, DIM, moringa, specifically chosen to tackle menopausal symptoms. Women I work with often report deeper sleep, fewer hot flushes, and brighter mornings within weeks. If you’d like to explore it, I’ve included the link in my profile. And husbands, share this with someone you love. She needs your understanding now more than ever.

This is where the videos generally end up: urging viewers to follow the link on the account’s profile page to buy remedies from a US supplements company called Wellness Nest, which the videos claim will help with a variety of symptoms. The remedies often include a Himalayan ooze called shilajit that people stir into hot water. And sure enough, the website at the end of the links does sell such products.

The @better_healthy_life account is not the only one using deepfakes to promote health supplements. In July, the French newspaper 20 Minutes reported that a number of prominent French people had been deepfaked in a similar way, with links to the same company. An LBC investigation in August found links to Wellness Nest products on deepfake videos of the British celebrity doctor, the late Dr Michael Mosley, and reported that the influencer Dr Idrees Mughal (known as ‘Dr Idz’) had been deepfaked too.

Other deepfakes have seen fake experts promote health supplements unrelated to Wellness Nest.

For example, the Mirror reported in September that another doctor had been deepfaked in a similar way to promote a different supplement, apparently not one sold by Wellness Nest. In November, Marion Nestle, Professor Emerita of Nutrition at New York University, said that she had also been deepfaked in Instagram posts promoting health products.

And last year, the BMJ reported that ITV’s Dr Hilary Jones had been deepfaked too. A clip of this still appears on Facebook, though with a label showing it has been fact checked.

Doctors who’ve been filmed taking part in popular health podcasts are obvious targets. For example, when we checked last month we found TikTok posts featuring the Stanford urologist Professor Michael Eisenberg, Dr Aditi Nerurkar (a stress specialist at Harvard) and Dr Mary-Frances O’Connor (a research psychologist at the University of Arizona) that were all fake, according to the people themselves.

Dr Sean Mackey, a professor of anesthesiology at Stanford who was also deepfaked on TikTok, told us: “These deepfakes represent a public health threat. Deepfaked endorsements don’t just dupe consumers; they risk real harm when people abandon proven therapies.” (After we contacted TikTok about these posts, it took them down as well.)

Spreading far and wide

Nor is the problem limited to TikTok. When we searched for links to the Wellness Nest website, we found an account on Facebook called WellnessNest UK, which has posted many videos that look like deepfakes of prominent people including Dr Mosley and Professor Tim Spector. An account called WellnessNest Health Hub has what looks like a deepfake of the psychiatrist and sleep specialist Dr Pierre Philip.

Instagram accounts with the handles @wellnessnest_us and @nest wellnest have posted apparent deepfakes of Dr Mosley, the marketing professor Scott Galloway, and others. On X we found accounts with links to wellnessnest.co posting what look like deepfakes of the sleep scientist Dr Matthew Walker and the mental health podcaster Mel Robbins.

One account on YouTube has posted many videos that look like deepfakes, including one that looks like the nutrition author Dr William Li, and another that looks like Dr Mackey.

We asked all the social media companies involved whether they were aware of videos like these and what they were doing about them.

Google, which owns YouTube, told us: “All content uploaded to YouTube must comply with our Community Guidelines, regardless of how it is generated. If we find that content violates a policy, we take action.” It also told us that it had added transparency labels to the videos on the account we flagged to make it clear that they are “altered or synthetic content”.

Meta, which owns Facebook and Instagram, told us it removes harmful misinformation, including examples that promote miracle cures, although at the time of writing we cannot see that any enforcement action has been taken against the accounts and posts we brought to its attention. We have not heard back from X at the time of writing.

What is really going on?

We’re not sure why social media is suddenly covered with deepfake videos promoting supplements.

While some of the accounts sharing deepfakes use the name “Wellness Nest” in their account handle, or link to the company in their bio, this does not mean that they are operated by Wellness Nest itself.

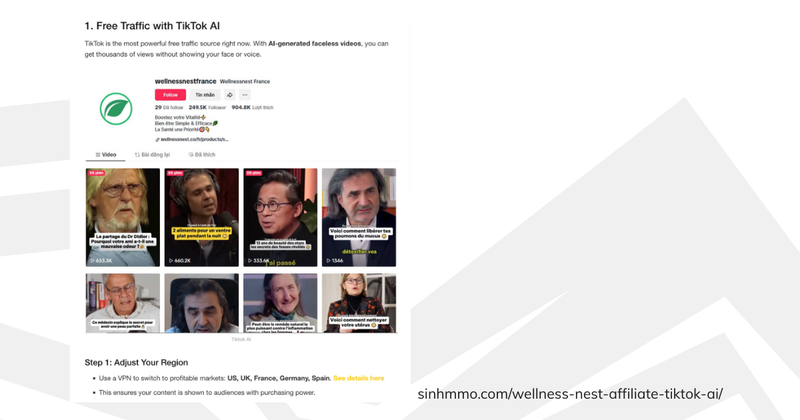

Wellness Nest does pay people to share links to its website, and thereby generate sales of its products, in a practice known as “affiliate marketing”.

Many of the links on the accounts we’ve seen posting deepfakes do go to the Wellness Nest website, where you can buy its products. Some also look like they may be affiliate links, because the web address includes the sequence “?ref=” followed by a referral code. (This is a common way to track where visitors to a website have come from, and therefore who sent them, in order to know who should be paid for the referral.)
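To illustrate how this kind of referral tracking works, here is a minimal sketch in Python, assuming the referral code is carried in a query parameter named `ref` as described above. The `referral_code` function and the example URLs are hypothetical and are not taken from any of the accounts or links we examined.

```python
from typing import Optional
from urllib.parse import urlparse, parse_qs


def referral_code(url: str) -> Optional[str]:
    """Return the value of the 'ref' query parameter in a URL, or None if absent.

    Hypothetical helper for illustration: it assumes the referrer is identified
    by a '?ref=' parameter, as in the links described in this article.
    """
    # Split the URL and parse its query string into a dict of lists,
    # e.g. "ref=abc123" -> {"ref": ["abc123"]}
    query = parse_qs(urlparse(url).query)
    values = query.get("ref")
    return values[0] if values else None


# Hypothetical links on a placeholder domain, not real affiliate URLs.
print(referral_code("https://shop.example.com/products/item?ref=abc123"))  # abc123
print(referral_code("https://shop.example.com/products/item"))  # None
```

A retailer's server could read the same parameter when a visitor arrives and record which referrer to credit for any resulting sale.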

We can’t say for certain whether these links are part of a formal affiliate programme, but they do link directly to the Wellness Nest website. We also don’t know whether all Wellness Nest affiliates sign agreements with the company directly, or whether it manages some of them through a third party.

When we contacted Wellness Nest, it told us: “These affiliate accounts are 100% unaffiliated with Wellness Nest.”

It also shared a blog post it published in response to the 20 Minutes story in July, which said:

Wellness Nest has never used AI-generated content, never paid celebrities, and never claimed our Shilajit can cure or treat any medical condition…

As our community grows, more content creators and affiliates have started sharing their experience with Wellness Nest. While we truly appreciate their support, we’ve also noticed that some promotions go too far. We want to be clear: Wellness Nest has a strict affiliate policy and detailed guidelines on how our products should be promoted - including what not to say. Overclaiming, misleading benefits, or referencing unverified medical outcomes is strictly against our terms.

Whenever we identify a violation, we reach out to the creators directly and ask them to take the content down. That said, we are a small team - and unfortunately, we cannot control or monitor affiliates around the world.

This statement leaves a number of unanswered questions. When we contacted Wellness Nest, we asked whether any of the links used by the accounts publishing deepfakes were part of its affiliate programme, or had generated any sales. We also asked how Wellness Nest enforces the rules of its affiliate scheme, and whether it has terminated any agreements with affiliate partners because they had posted deepfakes. Wellness Nest did not answer these questions.

We also asked whether Wellness Nest was aware of a blog we found that purports to explain in detail how people can earn money from the company by generating and sharing deepfake videos. It links to supposed guides to getting around the social media platforms’ detection efforts, choosing suitable people to impersonate, generating effective scripts in different languages and using AI to mimic real voices. Wellness Nest did not reply to this question either.

TikTok may have finally taken down the video of Professor Taylor-Robinson, but many other deepfakes remain, on multiple platforms, still attracting likes, comments and shares.

Indeed, now that everybody with an internet connection can make videos showing any expert saying anything they want, this may be just the beginning of a global struggle to keep track of who the real experts are.

Full disclosure: the Health Foundation has funded Full Fact’s health fact checking since January 2023. We disclose all funding we receive over £25,000 and you can see these figures here. (The page is updated annually.) Full Fact has full editorial independence in determining topics to review for fact checking and the conclusions of our analysis. Full Fact also works with Meta on its Third-Party Fact Checking programme, and has received funding from Google.