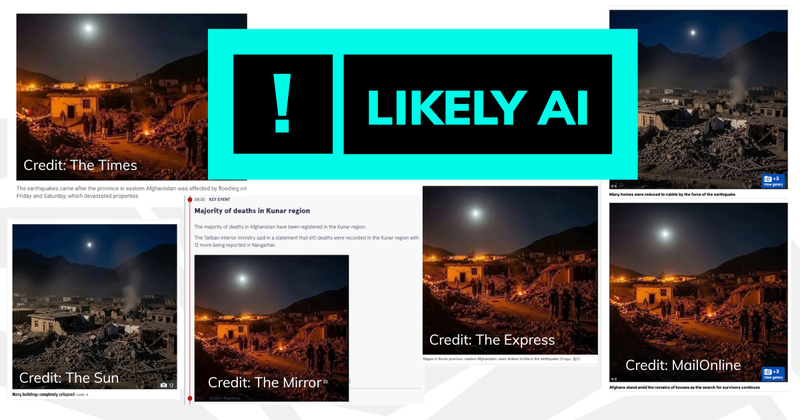

‘Afghanistan earthquake’ images used by major UK media outlets were likely made with AI

Five major UK media organisations shared images that were very likely made with AI in their reports on the recent earthquake in Afghanistan.

MailOnline, the Daily Express, the Mirror and The Sun all used the same two images and credited them to the social network X. The Times also used one of these images but didn't credit it to a source. All of these outlets have now removed the images, after Full Fact got in contact.

The two images both show damaged buildings, including those reduced to rubble, with mountains and a moon-lit sky in the background. One of the images is also illuminated by orange light, seemingly from small fires, and shows a number of people standing in small groups.

There is strong evidence to suggest that both images were created or at least edited with AI. The images have been flagged by Google Lens as having been “made with Google AI”, while an AI expert told us it was likely the images were fully AI-generated.

However, it is difficult for us to say with certainty that any given image was created with AI; we've written more about the challenges of identifying AI-generated content here. It's also possible that some parts of these images are real and do show the aftermath of the earthquake, but that AI has been used to alter or augment them in some way.

We’ve not been able to find the original source of the images, which have been widely used by overseas media as well.

The Afghan broadcaster Shamshad News appears to have been one of the first media outlets to use the images, which it posted on X within hours of the earthquake. But it's unclear whether it obtained them from elsewhere and, if so, what the original source of the images was. We've contacted Shamshad News about the images and will update this article if we receive a response.

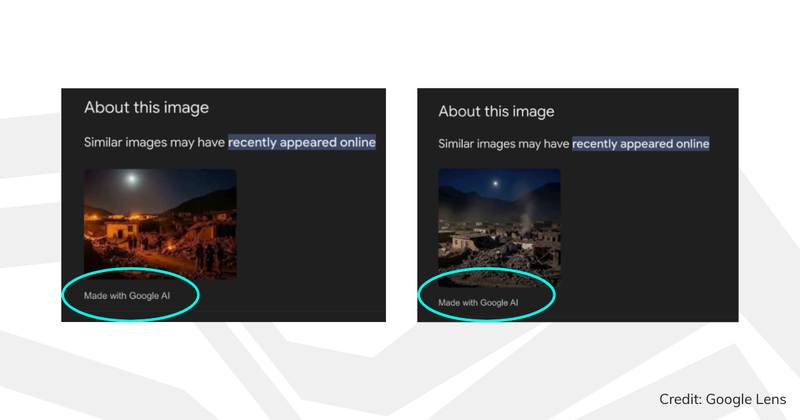

‘Made with Google AI’

When we searched these two images using Google Lens and then selected the ‘about this image’ tab, a note under each of the images said it was “made with Google AI”.

According to Google, these images both contain the SynthID watermark, which means Google AI has been used to either process, edit, or create the image.

Google calls SynthID an “invisible digital watermark” that is embedded “across Google’s generative AI consumer products”. While it is invisible to the human eye, the watermark remains detectable even if the quality or size of the picture is changed.

We’ve written in the past about other images that contained a SynthID and were identified as “made with Google AI”. A Google spokesperson previously told us in relation to a picture supposedly showing the aftermath of the LA fires that it was “not possible to confirm how or to what degree AI was used to generate or modify the image”.

We showed these latest images to Professor Hany Farid, who specialises in digital forensics, misinformation and image analysis at the University of California, Berkeley, and is chief science officer at GetReal Security, a cybersecurity company focused on preventing malicious threats from generative AI. He confirmed the presence of a SynthID watermark in the images, which he said was "highly compelling" evidence that they were edited or generated with AI.

He added: “In looking [in] more detail at the SynthID watermark, I think it is most likely that these images are fully AI-generated.”

Media responses

The images were published by several media organisations with captions about the magnitude 6.0 earthquake that hit eastern Afghanistan on 31 August, killing more than 800 people, mostly in the mountainous Kunar region.

When Full Fact contacted The Times, the Daily Express, MailOnline, the Mirror and The Sun about our findings, all of the outlets removed the images from their coverage.

A note added to the Mirror live feed says: “A previous version of this article included photographs which were later discovered to have likely been created by AI. These have since been removed.”

The MailOnline article was updated to say: “An earlier version of this article contained two images, now removed, that were sourced from an established Afghan news website but which we have since been informed were AI-generated.”

The Daily Express removed both images from one of its articles on the earthquake and added a note saying "a previous version of this article included photographs which were later discovered to have likely been created by AI". (However, at the time of writing, one of the pictures is still being used as the featured image for its live feed on the earthquake. Full Fact has asked the Daily Express about this and will update this article if we receive a response.)

The Times and The Sun did not include a note about the images being removed.

As AI-generated content becomes increasingly convincing and images can spread quickly online, it's important that media organisations thoroughly verify all images. When errors are made, Full Fact expects media organisations to take decisive and transparent steps to address them.

We’ve written a guide with tips on how to spot AI-generated images yourself.