A fact checker's guide to spotting if something is AI

What do the following three things have in common: amazing scenes of ‘miracle houses’ that survived the LA wildfires, a picture of Israeli soldiers taken prisoner by Hezbollah, and an image of a young Donald Trump with convicted sex offender Jeffrey Epstein?

The answer? There’s no evidence any of these images are real. All were almost certainly created using Artificial Intelligence (AI), but have gained traction on social media after being shared widely, including by many people who believe they are genuine.

Rapid advancements in AI technology have meant that identifying these kinds of images and videos as fakes is becoming increasingly difficult.

At Full Fact we regularly debunk this kind of misleading content as part of our work tackling the spread of harmful misinformation on social media platforms.

However, we often end up having to caveat our assessments, saying that such content is “likely” or “almost certainly” AI-generated.

That’s because in many cases we cannot prove beyond all doubt that the content is not real, or that it was not edited or digitally created in some other way.

This is especially hard with deepfake audio content, where even the experts can struggle to distinguish between clips produced using AI and the real thing.

There are online tools that say they can be used to verify for certain whether something is an AI creation. But so far we have not found these tools to be reliable, and we don’t routinely use them in our fact checking of suspected digitally-created content.

Instead we stick to tried and tested methods of fact checking detective work—analysing the media itself, using reverse image search tools, asking whether a given scenario is plausible, and looking for inconsistencies.

We also regularly speak to experts in the field, such as Professor Hany Farid, who specialises in digital forensics and image analysis at the University of California, Berkeley, to help determine whether something has been dreamt up by algorithm from a human prompt.

Six fingers and missing legs

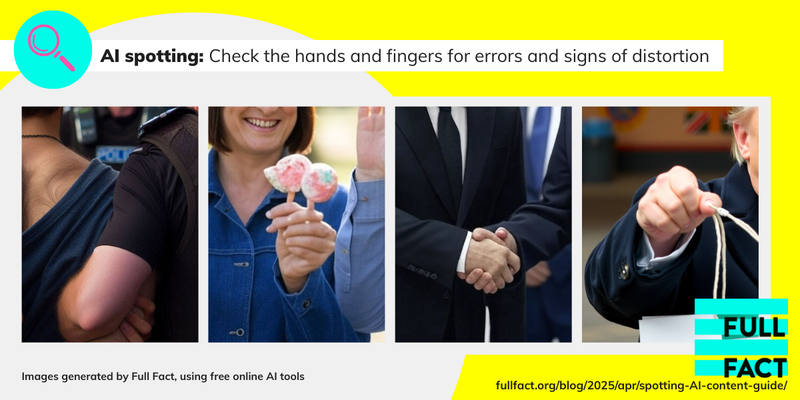

As we’ve written before in our guide to spotting AI-generated images, there are classic mistakes that even increasingly sophisticated AI tools often continue to make when generating images and videos of people.

Fingers, arms, ears, toes and teeth are often parts of the body where errors may appear—sometimes the people depicted have too many, and other times not enough.

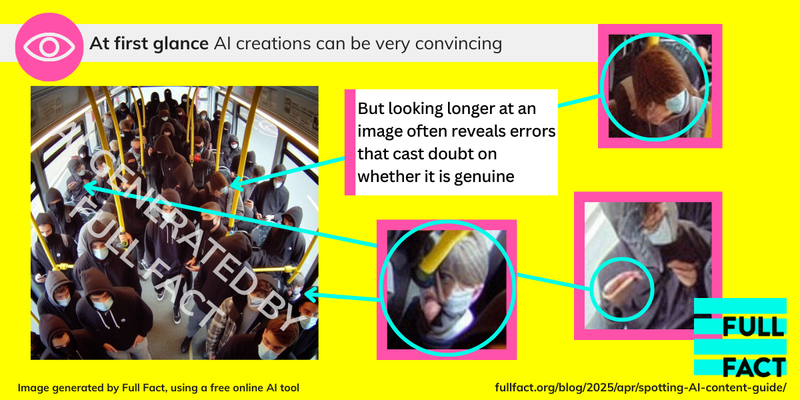

But at first glance you might miss these discrepancies.

For instance, an image of a younger Donald Trump apparently sitting on a sofa with Jeffrey Epstein and several young women, which we fact checked in January, initially looks genuine—when I loaded it up onto my computer screen my partner asked when it had been taken, as it had a convincing retro finish similar to archive film photographs.

But a closer inspection revealed multiple inconsistencies that convinced us it wasn’t real, including that Epstein appeared to have no legs.

In another image allegedly showing four Israel Defense Forces soldiers with their hands tied behind their backs who had supposedly been captured in southern Lebanon, the hands of the men had been deliberately obscured with blurring, presumably to hide similar errors.

But there were other mistakes that would not appear in a real photograph, such as an unnaturally long foot which merged with the floor, and a rifle which had barrels at both ends.

Before sharing striking images like these that you find online, we advise taking a second to look more closely.

Hidden and visible watermarks

AI tools have become increasingly accessible in recent years—particularly with the roll-out of Grok, a generative AI chatbot integrated into X (formerly Twitter), and other publicly available tools which allow you to quickly create images and videos from written prompts.

While this ease of access has resulted in much more AI-generated content circulating, it has also given us more opportunities to debunk it.

Right now, Grok images made through X, as well as some videos created for free using other AI software such as Sora (the text-to-video generator from OpenAI, which created ChatGPT) and Runway, contain visible watermarks showing where they originated. These watermarks can give us strong evidence that even the most convincing content isn’t genuine.

We’ve fact checked misleading content being shared about the Myanmar earthquake, Canadian politician Mark Carney, protests in Turkey and ousted Syrian President Bashar al-Assad this way.

But it’s worth remembering these watermarks can easily be cropped away before an image is shared. And if you pay to use an AI tool, content can also often be generated without a watermark.

Some AI-generated images even contain hidden watermarks. We spotted such a watermark on a viral image we checked in February which appeared to show masked men carrying axes in a hospital—some people online said this showed an incident which they claimed had happened in Birmingham.

When we put the image through Google’s reverse image function, under the ‘about’ section it revealed it was flagged as “Made with Google AI”. Google had previously told us that content produced with its AI products contains an embedded ‘SynthID’ digital watermark that is not visible to the human eye. This watermark can be used to identify content which comes from Google’s AI tools, though Google told us the presence of a watermark can’t tell us whether AI was used to completely generate a brand-new image or just modify an existing one.

For even more tips like this, check out our guides to spotting deepfakes and AI-generated images.

This article is part of the #FactsMatter campaign, which is highlighting the work we do at Full Fact and why we believe it matters. Over the course of the campaign we’ll be talking about how we check facts, the challenges we face in getting to the heart of evidence and the difference we can make when we do so.

We’re asking people to share what we publish, sign up to our newsletter and tell the world why #FactsMatter more than ever. Find out more about the campaign and how you can support it here.